Driver Fatigue Detection System (Embedded AI project)

This post presents my Embedded AI project, a Driver Fatigue Detection System. The system monitors a driver’s alertness in real time using AI algorithms and embedded sensors. By analyzing facial expressions, eye movements, and other behavioral cues, it detects signs of drowsiness or distraction and raises timely alerts to help prevent accidents. The project integrates AI with embedded systems to improve automotive safety and shows a practical application of machine learning in a real-world setting.

Neural Network Architecture:

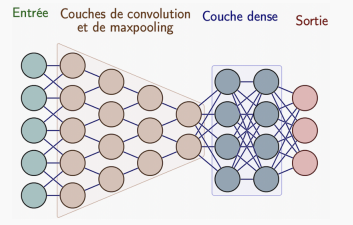

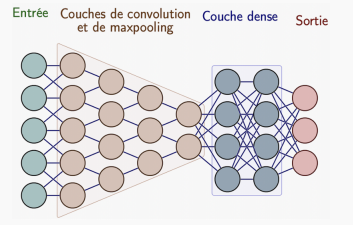

The core of the system is a convolutional neural network (CNN), structured as follows:

Convolutional layers: Extract spatial features from the eyes, such as eyelid shape, position, and patterns indicating fatigue. Filters in each layer learn to detect relevant features automatically.

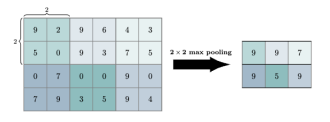

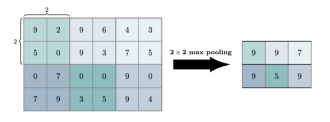

Max-pooling layers: Reduce the spatial dimensions of feature maps, keeping the most important information while reducing computational load.

Dropout layers: Randomly deactivate neurons during training to prevent overfitting and improve generalization.

Fully connected layers (perceptrons): Combine extracted features to classify the driver’s state as alert or drowsy.
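To make the effect of stacking these layers concrete, here is a minimal sketch that traces how the feature-map size shrinks through a small convolution and pooling stack. The 24x24 input, the 3x3 kernels, the 2x2 pooling windows, and the filter counts (32, 64) are illustrative assumptions, not the values used in the project, and the helper names are hypothetical.

```python
def conv_output_size(size, kernel=3, stride=1, padding=0):
    """Spatial size after a convolution (standard output-size formula)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_output_size(size, window=2):
    """Spatial size after non-overlapping max pooling (stride == window)."""
    return size // window


def trace_shapes(input_size=24, filters=(32, 64)):
    """Return the feature-map shape after each layer of a small conv/pool stack.

    input_size and filters are assumed values chosen only for illustration.
    """
    shapes = []
    size = input_size
    for n_filters in filters:
        size = conv_output_size(size)
        shapes.append(("conv", size, size, n_filters))
        size = pool_output_size(size)
        shapes.append(("pool", size, size, n_filters))
    # Flatten the last feature maps before the fully connected layers.
    shapes.append(("flatten", size * size * filters[-1]))
    return shapes


for shape in trace_shapes():
    print(shape)
```

Dropout does not appear in the trace because it leaves the shape unchanged: it only zeroes a random subset of activations during training.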

What I Learned from the Project

This project was an incredible learning experience, both technically and conceptually. Before this one, I had worked on several other projects throughout the year, including projects focused on physics, thermodynamics, applied mathematics, and statistics. However, this Driver Fatigue Detection System stood out as the most enjoyable and impactful.

I gained hands-on expertise with popular AI and computer vision libraries such as TensorFlow and Keras, which I used to design, train, and optimize the neural network, and OpenCV, which allowed me to process images and extract relevant features like eye regions and facial landmarks. Working with these libraries taught me how to efficiently handle real-time video data, perform image pre-processing, and implement deep learning pipelines.

I also strengthened my skills in object-oriented programming (OOP) with Python, a practice I started at a young age, well before joining INSEP. OOP proved essential in this project for organizing complex code, creating modular and reusable components, and managing interactions between different parts of the program, such as data preprocessing, model training, and real-time prediction. This structure made the project easier to debug, extend, and maintain as it grew more complex.

Beyond the technical tools, I learned how to tackle a complete AI project from start to finish: collecting and preparing data, designing and training neural networks, evaluating performance using metrics like accuracy and confusion matrices, and deploying the system for real-time use. I also developed a better understanding of the challenges in human-machine interaction, especially in safety-critical applications like driver monitoring, where precision and reliability are crucial.

This project not only improved my technical skills but also inspired me to pursue a career in artificial intelligence, shaping my professional goals and interests from that point onward.

This project focuses on developing a Driver Fatigue Detection System that monitors a driver’s alertness in real time to help prevent accidents caused by drowsiness. The system uses AI to detect signs of fatigue, such as drooping eyelids, yawning, or changes in facial expression.

Data Preparation:

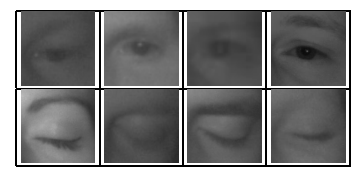

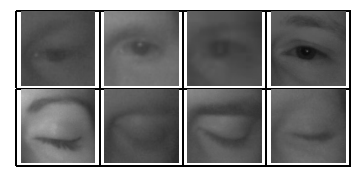

To train the model, I collected a dataset of driver eye images labeled as open or closed. The dataset included variations in lighting conditions, eye colors, eye shapes, and degrees of eyelid opening. Pre-processing steps included resizing images, normalizing pixel values, and augmenting the dataset with rotations, flips, and brightness adjustments to increase diversity and robustness. Careful pre-processing helps the neural network generalize to different drivers, lighting conditions, and camera angles.
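As a rough illustration of the normalization and augmentation steps, here is a dependency-free sketch that treats a grayscale image as a list of pixel rows. In practice these operations would normally be done with OpenCV or NumPy. The function names and the brightness factors (0.7 and 1.3) are assumptions for the example, not values from the project.

```python
def normalize(image):
    """Scale 8-bit pixel values (0-255) to floats in [0, 1]."""
    return [[pixel / 255.0 for pixel in row] for row in image]


def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def adjust_brightness(image, factor):
    """Scale normalized pixels by factor, clipping the result to [0, 1]."""
    return [[min(1.0, max(0.0, pixel * factor)) for pixel in row] for row in image]


def augment(image):
    """Return the normalized image plus three augmented variants."""
    base = normalize(image)
    return [
        base,
        flip_horizontal(base),
        adjust_brightness(base, 0.7),  # darker variant (assumed factor)
        adjust_brightness(base, 1.3),  # brighter variant (assumed factor)
    ]


# A tiny 2x2 "image" standing in for a cropped eye region.
variants = augment([[0, 255], [128, 64]])
print(len(variants))
```

Each original image yields four training samples here. Rotations and resizing are left out because they need interpolation, which a library handles far better than hand-written loops.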

Training Process:

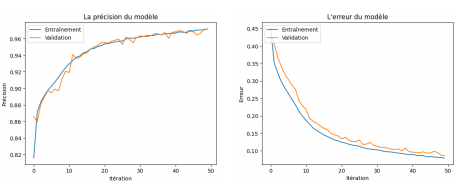

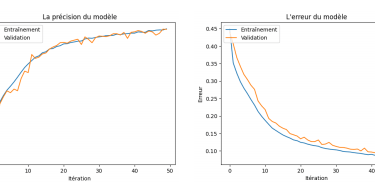

The model was trained on labeled images with a cross-entropy loss function, which measures the difference between predicted and true labels. The Adam optimizer was used to adjust the network weights efficiently during training. Regular evaluation on a validation set made it possible to monitor for overfitting and check that the model generalized well.
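To show what the cross-entropy loss actually computes, here is a small sketch of the binary form for the two-class case (open vs. closed). It assumes labels encoded as 0 or 1 and predictions given as the probability of class 1. The function name and the `eps` clipping value are choices made for this example.

```python
import math


def binary_cross_entropy(true_labels, predicted_probs, eps=1e-12):
    """Mean binary cross-entropy.

    true_labels: 0 or 1 per sample.
    predicted_probs: predicted probability that the label is 1.
    eps clips probabilities away from 0 and 1 to avoid log(0).
    """
    total = 0.0
    for label, prob in zip(true_labels, predicted_probs):
        prob = min(1.0 - eps, max(eps, prob))
        total += -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))
    return total / len(true_labels)


confident_and_right = binary_cross_entropy([1, 0], [0.9, 0.1])
confident_and_wrong = binary_cross_entropy([1, 0], [0.1, 0.9])
print(confident_and_right < confident_and_wrong)
```

The loss is small when the model assigns high probability to the correct class and grows quickly when it is confidently wrong, which is the signal the optimizer uses to update the weights.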

Figure: Convolutional neural network

Figure: Max-pooling layers

Model Evaluation:

Performance was assessed using multiple metrics:

Accuracy: The proportion of correctly classified images in both the training and validation sets.

Loss: The cross-entropy loss value provided insight into how well the model predicted the correct classes.

Confusion Matrix: Allowed detailed analysis of false positives (alert predicted as drowsy) and false negatives (drowsy predicted as alert), helping refine the model and improve reliability in real-world scenarios.
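The confusion-matrix counts and the metrics derived from them can be sketched as follows, treating "drowsy" as the positive class so that the definitions of false positive and false negative match the ones above. The labels in the example are made up purely to exercise the code, and the function names are hypothetical.

```python
def confusion_counts(true_labels, predicted_labels, positive="drowsy"):
    """Count true/false positives and negatives for a two-class problem."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for truth, prediction in zip(true_labels, predicted_labels):
        if prediction == positive:
            counts["tp" if truth == positive else "fp"] += 1
        else:
            counts["fn" if truth == positive else "tn"] += 1
    return counts


def accuracy(counts):
    """Fraction of all samples that were classified correctly."""
    return (counts["tp"] + counts["tn"]) / sum(counts.values())


def recall(counts):
    """Fraction of truly drowsy samples that were detected."""
    return counts["tp"] / (counts["tp"] + counts["fn"])


# Made-up labels for illustration only.
truth = ["drowsy", "drowsy", "alert", "alert", "alert"]
predicted = ["drowsy", "alert", "alert", "drowsy", "alert"]
counts = confusion_counts(truth, predicted)
print(counts, accuracy(counts), recall(counts))
```

In a safety-critical setting, recall on the drowsy class is worth tracking alongside accuracy, since a false negative (a drowsy driver classified as alert) is the costlier error.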

Once trained, the system can analyze real-time video from a driver’s camera and predict fatigue levels, triggering alerts if necessary. Visualization tools were implemented to monitor predictions and track model performance live.
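One simple way to turn per-frame predictions into an alert is to require that the eyes be classified as closed for several consecutive frames, so that a normal blink does not trigger it. The sketch below assumes this rule. The function name, the 0.5 threshold, and the 15-frame window are hypothetical values, not the alert logic of the original system.

```python
def fatigue_alerts(closed_probabilities, threshold=0.5, min_consecutive=15):
    """Yield True for each frame where an alert should be active.

    closed_probabilities: per-frame probability that the eyes are closed.
    An alert is active once the eyes have been classified as closed for at
    least min_consecutive frames in a row (assumed rule, for illustration).
    """
    streak = 0
    for probability in closed_probabilities:
        if probability >= threshold:
            streak += 1
        else:
            streak = 0  # eyes open again: reset the counter
        yield streak >= min_consecutive


# Three closed frames, one open frame, then two closed frames.
frames = [0.9, 0.9, 0.9, 0.1, 0.9, 0.9]
print(list(fatigue_alerts(frames, min_consecutive=3)))
```

Because the function is a generator, it can consume predictions frame by frame from a live video loop without storing the whole stream.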

For more technical detail, see the accompanying PDF.

This project demonstrates the integration of embedded systems and deep learning to enhance driver safety, showing how AI can detect early signs of fatigue and prevent potential accidents.

Figure: Model accuracy and error